Pinch and a punch?

What a week ;)

Meme break

Recent food for thought

🤘 (un)supervised learning is always free- paid subscriptions go towards producing content. Cheers!

I recently recorded a podcast with Ritendra Datta, Head of Applied AI at Databricks.

We discussed surprising parallels between robot motion planning and the way AIs learn complex tasks.

Turns out those aren't as different as you might think.

Advanced motion planning means robots need to factor in:

Their own physical limitations (joint angles, speed limits)

Uncertainty in the environment (a box might shift, a person might walk by)

Finding the "smoothest" path, not just any path, for delicate tasks.

AIs mastering complex skills use a technique called reinforcement learning.

How is it similar?

The AI tests different actions (like a robot trying arm movements).

It gets a "reward" based on the outcome (did the robot reach the cup?)

Over time, it refines its strategy to maximise those rewards.

Both robots and AIs face unpredictable situations. A good motion planner lets the robot adjust in real-time. A well-trained AI tweaks its actions based on new data. Neither has all the answers in advance!
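To make the test, reward, refine loop concrete, here is a minimal sketch of one of the simplest reinforcement learning setups (an epsilon-greedy "bandit"). It is an illustration only, not anything discussed on the podcast: the function name `train_reach_policy`, the three candidate arm movements, and their success rates are all made up for the example.

```python
import random

def train_reach_policy(success_prob, episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over a handful of candidate actions."""
    rng = random.Random(seed)
    n_actions = len(success_prob)
    value = [0.0] * n_actions  # running estimate of each action's average reward
    count = [0] * n_actions    # how many times each action has been tried

    for _ in range(episodes):
        # Test an action: mostly the current best guess, sometimes a random one.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: value[a])

        # Reward: 1 if the simulated arm reached the cup, else 0.
        reward = 1.0 if rng.random() < success_prob[action] else 0.0

        # Refine the strategy: move the estimate toward the observed reward.
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]

    return value

# Hypothetical success rates for three arm movements (assumed values).
estimates = train_reach_policy([0.2, 0.5, 0.9])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print("Estimated reward per movement:", estimates)
print("Preferred movement:", best_action)
```

The occasional random choice (`epsilon`) is what lets the learner keep discovering better movements instead of sticking with the first one that happened to work.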

More on this in an upcoming podcast ep.

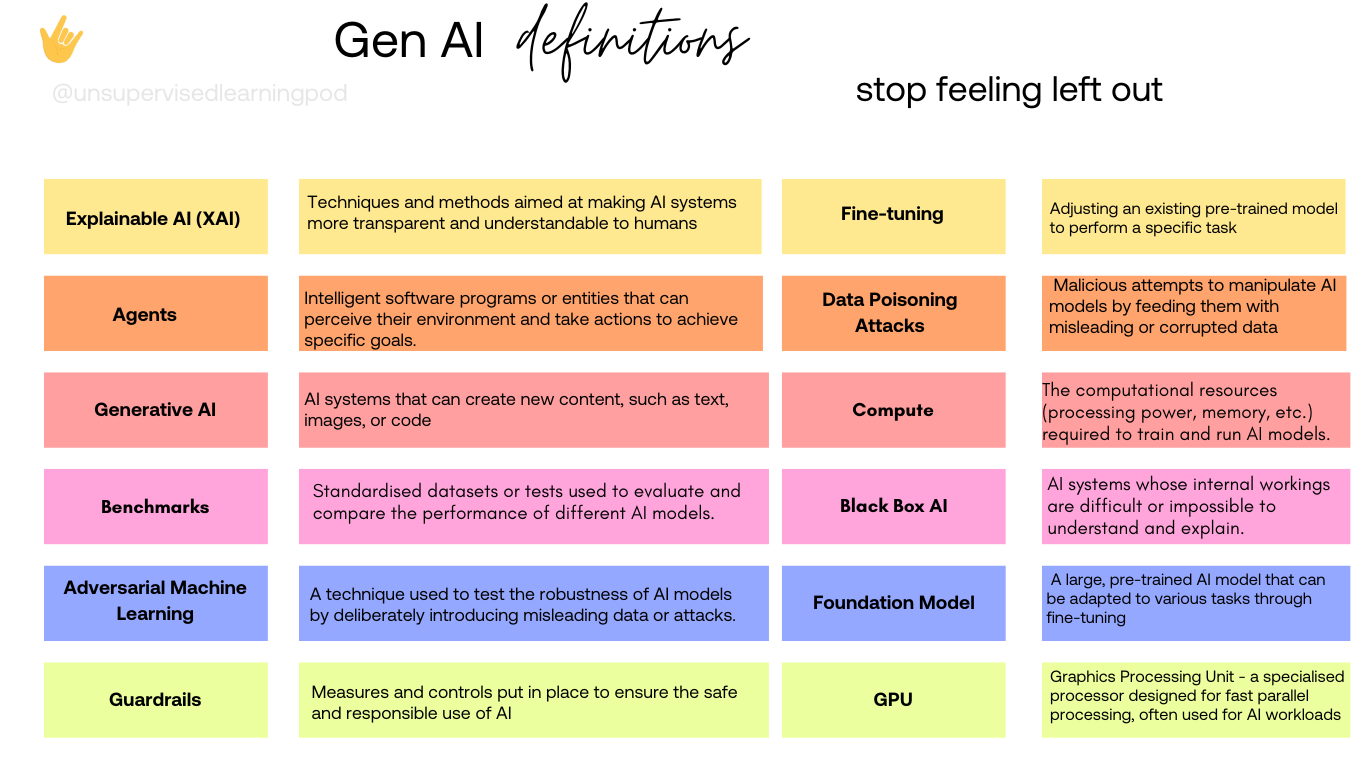

Cheat sheet for GenAI terms

What we’ve learned🤓

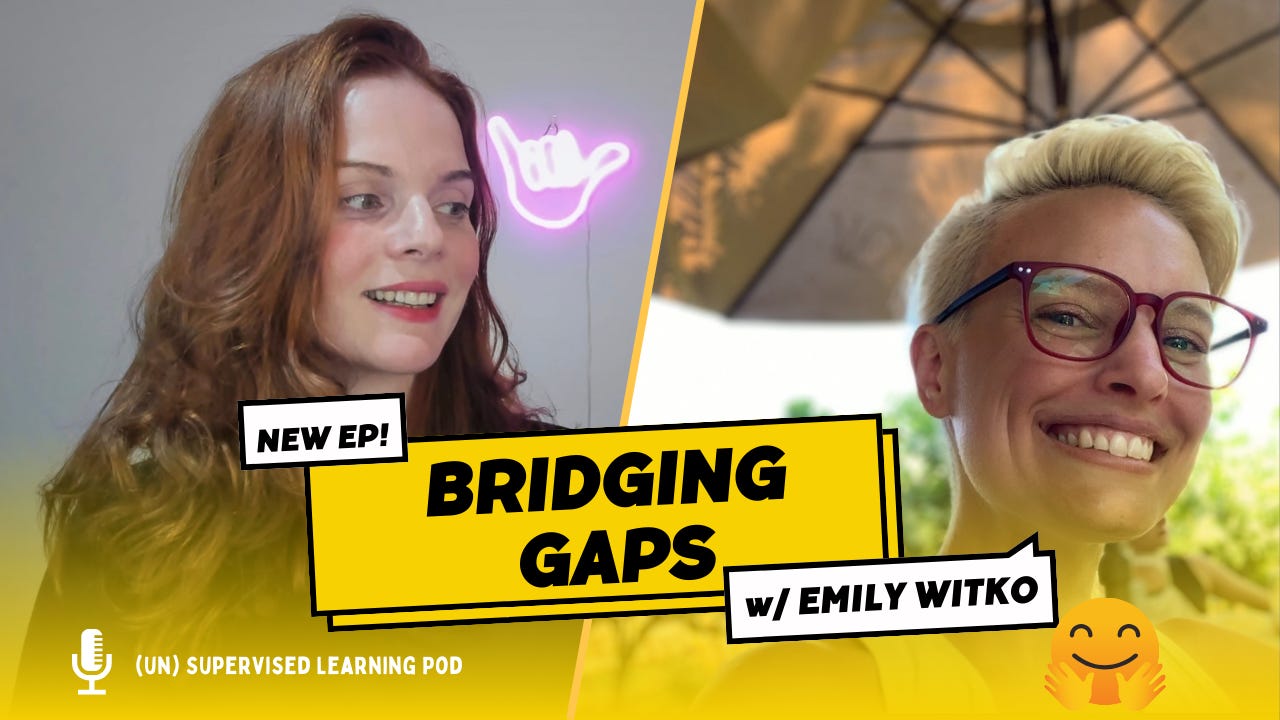

A new podcast episode with Emily Witko of Hugging Face has dropped (YouTube, Spotify, Apple, and Substack).

In this episode, Emily Witko discusses their role at AI startup darling Hugging Face, and what working in a deeply technical field looks like for someone with a "non-technical" background.

The quick learn- Neural networks

Here’s the TikTok version

@unsupervisedlearningpod: Feeding the algo #ai #education #wfh

Imagine you're exploring a spider's web, intricate and complex. Each strand in this web is like a neuron or node, the fundamental unit in a neural network. These neurons are not just mere threads; they are pathways for information, carrying varied levels of significance, determined by what we call "weights." These weights decide how vital each connection is within the network.

So you show this web a photo of a cat. How does it respond? The network is structured in layers. The first layer deals with the basics – it takes in the raw data, in this case, the pixels of your cat photo. Next come the hidden layers, the real maestros of this symphony. They work hard, analysing shapes, edges, and textures.

But how does this web of neurons learn to identify cats, or anything else for that matter? The process is like feeding a curious mind a constant stream of knowledge. We provide the network with numerous examples – pictures of cats, dogs, birds, and so forth, each time telling it what it's looking at. Then comes the fine-tuning. The network makes a guess, and if it's off the mark, it adjusts its weights. This is like tweaking the tension in each strand of the web, strengthening some connections while weakening others.

As with any skill, practice makes perfect. The more examples the network processes, the better it gets at its job. Eventually, it reaches a point where it can identify new pictures of cats that it has never encountered before – a testament to its learning capabilities.

Now, let's talk about the real-world magic these neural networks bring to life. They're the wizards behind image recognition – think of how your smartphone organises your photo gallery or how self-driving cars navigate busy streets. Then there's language understanding. These networks are why translation services are getting more accurate and chatbots are becoming more conversational. They're also masters of prediction, powering systems like Spotify's music recommendations.

The roots of neural networks trace back to 1944, inspired by the workings of the human brain. Yet, their true potential is only being unravelled now, thanks to advancements in technology and computing power. From their humble beginnings as theoretical constructs, neural networks have evolved into the driving force behind some of the most cutting-edge technologies we see today. We're looking at a world where AI could assist in medical diagnoses, help in environmental conservation, and even further the exploration of space.


Imagine a giant spiderweb of knowledge

The nodes in the web are called "neurons", the basic building blocks of a neural network.

The threads are like the pathways information travels on. Each thread has a "weight" that determines how important that connection is.

The input is the question you ask the spiderweb. Maybe you show it a picture of a cat.

The spiderweb is organised in layers. The first layer takes in the raw information (pixels of the cat picture). Hidden layers in the middle process it, figuring out shapes, edges, etc. The final layer produces an answer – "Yes, this is a cat!" (we’ve spoken about this before).

You feed the network lots of examples (pictures of cats, dogs, birds). With each example, you tell it the right answer.

The network compares its answer to the correct one. If it's wrong, it changes the "weights" on those threads, making some connections stronger or weaker.

Getting better with practice: the more examples it sees, the smarter the network gets. Eventually, it can recognise new cat pictures it has never seen before!
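The guess, compare, adjust cycle can be sketched with a single artificial neuron (a perceptron). This is a deliberately tiny stand-in, not a full layered network, and everything in it is assumed for illustration: the two hand-made features `[has_whiskers, barks]` replace real pixels, and the names `predict` and `train` are hypothetical.

```python
def predict(weights, bias, features):
    """Weighted sum of the inputs; answer 1 ("cat") if it is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0

def train(examples, epochs=20, lr=0.1):
    """Perceptron rule: change the weights only when the guess is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # error is 0 for a correct guess, +1 or -1 for a wrong one
            error = label - predict(weights, bias, features)
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias

# Made-up training data: [has_whiskers, barks] -> 1 for cat, 0 for not a cat.
examples = [([1, 0], 1), ([1, 1], 0), ([0, 1], 0), ([0, 0], 0)]
weights, bias = train(examples)

for features, label in examples:
    print(features, "guess:", predict(weights, bias, features), "answer:", label)
```

Real networks stack many of these units in layers and use a smoother update rule, but the core idea is the same: wrong answers nudge the weights, right answers leave them alone.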

Things neural nets power…

Image recognition

That's how your phone sorts photos or a self-driving car sees the road.

Language understanding

Translating between languages, chatbots getting better at conversations.

Making predictions

(think Spotify recommender system)

The basics of neural networks were thought of back in the 1940s (McCulloch and Pitts published their model of an artificial neuron in 1943), inspired by how our brains work!

Quick news🗞️

I want to give a shoutout to Jeez AI. They're doing some really great community building in the machine learning and robotics space to support graduates and early-career professionals.

Speaking of community- the (un)supervised learning community is still building out content, folks to educate, and meetups.

Join here

Curious about past episodes?

- Mike Bird, Technical Community Manager at OpenInterpreter, spoke about alternatives to OpenAI’s code interpreter

- Kyle Legare and I spoke about side projects with local AI: home automation and the Vancouver AI meetup scene

- Daniel Han and I spoke about GPUs and training LLMs more efficiently with Unsloth.ai

- Alex and I spoke about decentralised AI and reducing costs with OpenRouter

Ways to help out

Share 🤘 (un)supervised learning. When you use the referral link below, or the “Share” button on any post, you'll get credit for any new subscribers (plus stuff gets read!)

Recommend someone for the pod- Have someone in mind who’s doing cool things across AI or opensource? Send them this way!

Follow and rate and all that jazz- Spotify, Youtube and Apple podcasts are a learning curve but I know the algo beast loves a rating, yours helps!

Cheers,

Renee
